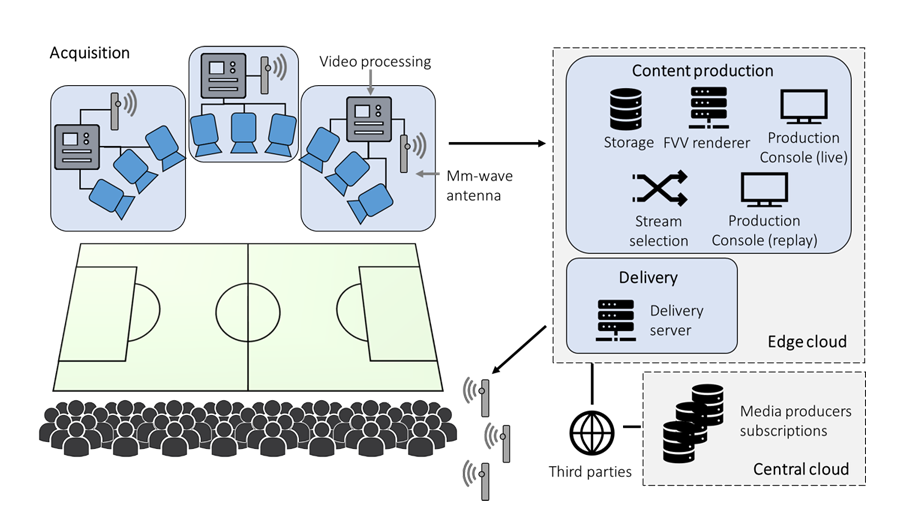

Use Case 1: Live audio production

In a typical live audio production setup, performers are equipped with Programme Making and Special Events (PMSE) equipment such as wireless microphones and in-ear monitoring (IEM) systems. UC1 aims to deploy a local, high-quality 5G audio production network offering ultra-reliable low-latency communication, based on a standalone non-public network (SNPN), by designing an NR RedCap (Reduced Capability) audio device prototype. Besides reliability, synchronicity and spectral efficiency, latency is one of the main challenges for professional audio use cases. UC1 will therefore focus on meeting a stringent round-trip latency requirement of 4 milliseconds, measured from the microphone to the IEM via the live audio processing tools and the other network elements. Using 5G as a system-based approach is expected to reduce the effort that remote production and spectrum access require today.
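The 4 ms figure is a budget shared by every element in the chain. The minimal Python sketch below, assuming a simple additive model, shows how such a budget could be checked. The segment names, their values and the 48 kHz sample rate are hypothetical placeholders, not figures from UC1.

```python
# Minimal sketch of a round-trip latency budget check for UC1.
# The 4 ms budget is the UC1 requirement; the segment names and values used
# below are hypothetical placeholders, not measured or specified figures.

BUDGET_MS = 4.0  # round trip: microphone -> live audio processing -> IEM


def remaining_margin_ms(segments_ms: dict[str, float], budget_ms: float = BUDGET_MS) -> float:
    """Budget minus the summed per-segment latencies; negative means the budget is exceeded."""
    return budget_ms - sum(segments_ms.values())


def samples_in(duration_ms: float, sample_rate_hz: int) -> int:
    """Number of whole audio samples that fit into duration_ms at sample_rate_hz."""
    return int(duration_ms * sample_rate_hz // 1000)


if __name__ == "__main__":
    # Hypothetical split of the round trip into three segments (placeholder values).
    placeholder_segments = {
        "uplink_mic_to_network": 1.0,
        "audio_processing": 1.5,
        "downlink_network_to_iem": 1.0,
    }
    print(remaining_margin_ms(placeholder_segments))  # 0.5
    # Assumed 48 kHz sample rate: the whole 4 ms round trip spans 192 samples.
    print(samples_in(BUDGET_MS, 48_000))  # 192
```

An additive model ignores jitter. For ultra-reliable operation, each segment would more realistically be characterised by a high percentile of its latency distribution rather than by a single value.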

This use case covers four main areas of a wireless production scenario:

- Capturing of live audio data

Producing and capturing a live event for further exploitation involves many wireless audio streams.

- Temporary spectrum access

Throughout the production in the studio, each wireless application requires its own spectrum occupancy setup and its own spectrum access.

- Automatic setup of wireless equipment

Once a grant for the usable spectrum has been received, all wireless PMSE equipment is set up and configured automatically.

- Local high-quality network

A typical studio setup is limited in coverage and in the number of wireless devices (UEs).
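To illustrate what automatic setup after a spectrum grant could involve, the minimal Python sketch below turns a granted frequency range into per-device channel assignments using simple first-fit packing. It is a hypothetical example, not the mechanism used in UC1. The `allocate` function, the device names, the bandwidths, the guard band and the frequencies are all placeholders, and a real PMSE frequency plan would also need to account for constraints such as intermodulation products between transmitters.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Assignment:
    """One device's channel inside the grant (hypothetical type), in kHz."""
    device: str
    low_khz: int
    high_khz: int


def allocate(grant_low_khz: int, grant_high_khz: int,
             devices: List[Tuple[str, int]], guard_khz: int = 0) -> List[Assignment]:
    """First-fit packing of each device's channel into the granted range, lowest frequency first.

    devices is a list of (name, bandwidth_khz). Raises ValueError if the grant is too small.
    Integer kHz values are used to avoid floating-point rounding in the channel edges.
    """
    assignments = []
    cursor = grant_low_khz
    for name, bandwidth in devices:
        high = cursor + bandwidth
        if high > grant_high_khz:
            raise ValueError(f"grant exhausted before assigning {name}")
        assignments.append(Assignment(name, cursor, high))
        cursor = high + guard_khz  # leave a guard band before the next channel
    return assignments


if __name__ == "__main__":
    # Hypothetical grant and device list; all values are placeholders.
    plan = allocate(470_000, 472_000,
                    [("mic_1", 200), ("mic_2", 200), ("iem_1", 400)],
                    guard_khz=100)
    for a in plan:
        print(a)
```

First-fit keeps the example short. A production planner would more likely search over candidate frequencies and reject combinations that violate interference constraints.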

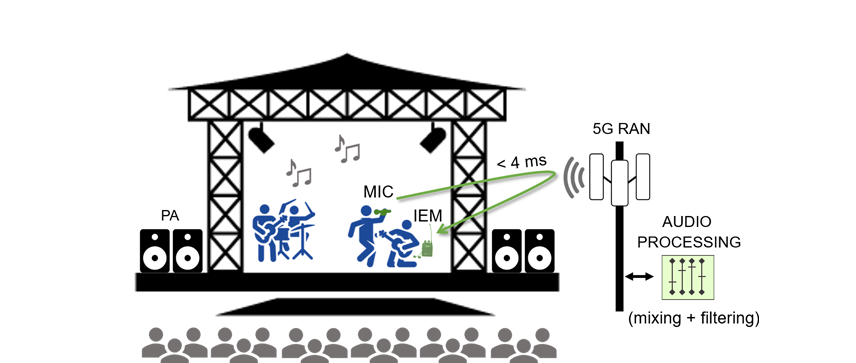

Use Case 2: Multiple camera wireless studio

This use case centres on multi-camera audio and video production in a professional environment. It aims to use 5G to match the performance and capabilities of existing technologies such as COFDM radio cameras. Furthermore, UC2 explores multi-location scenarios with production facilities local to an event, as well as remote and distributed production models. Additional scenarios are expected to integrate 5G-based contribution solutions, using different types of network configuration, to provide contribution links into production centres.

This will help media companies meet the continuous challenge of producing more content with fewer resources and of automating some of their processes, opening new ways to increase efficiency and effectiveness in production. The 5G-based wireless IP component is key to improving technical and operational efficiency, increasing flexibility and reducing production costs.

In this scenario, 5G NPNs play a key role in enabling a self-operated environment that does not depend on the network conditions of any underlying mobile network operator (MNO). The scenario also envisages 5G-enabled equipment that can be used transparently on both NPNs and public networks, and that can even move seamlessly between them during a production while continuing to transmit. Together with a common IP-based infrastructure, this maximizes the interoperability between different systems and components.

This use case also includes an outdoor production scenario, in which two or more additional 5G-enabled cameras and sound capture devices remain connected to the NPN, which acts as an extension of the indoor TV studio. Here, the cameras will be controlled from the broadcast centre located in the studio. Multiple TV cameras, microphones, intercom systems and monitoring devices (provided by Sennheiser) will be connected to the 5G gNB over radio links or via direct device-to-device communication.

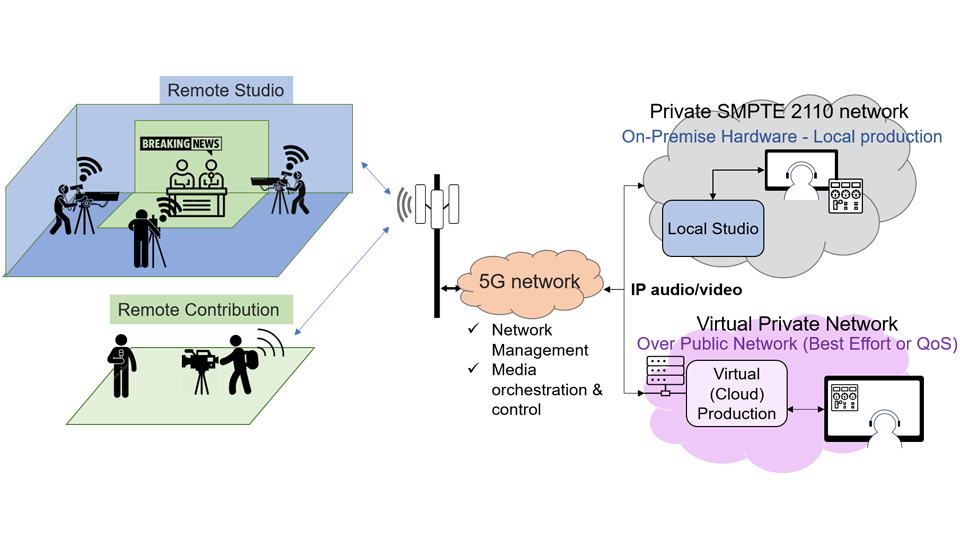

Use Case 3: Live immersive content production

This use case considers a real-time, end-to-end Free Viewpoint Video (FVV) system that covers capture, 5G contribution, virtual view synthesis on an edge server, 5G delivery and visualization on users’ terminals. The system will generate, in real time, a synthesized video stream from a freely moving virtual viewpoint.

FVV technology can provide immersive video experiences that allow the user to move freely around the scene, navigating along an arbitrary trajectory as if a virtual camera could be positioned anywhere within it. This is made possible by capturing the scene with multiple cameras, which allows its geometry to be estimated. From that geometry, FVV technology can render other points of view of the scene (virtual views) even where no actual camera is placed.
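The geometric core of rendering a virtual view can be illustrated with depth-image-based rendering (DIBR), a generic technique and not necessarily the one used in this FVV system: a pixel with known depth is back-projected to 3D and re-projected into a virtual pinhole camera. The minimal Python sketch below assumes the same intrinsics for the real and virtual cameras and a rigid transform X' = R X + t from the real to the virtual camera frame. The `Intrinsics` type, the function names and all numeric values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


@dataclass(frozen=True)
class Intrinsics:
    """Hypothetical pinhole intrinsics: focal lengths and principal point, in pixels."""
    fx: float
    fy: float
    cx: float
    cy: float


def backproject(u: float, v: float, depth: float, k: Intrinsics) -> Vec3:
    """Pixel (u, v) with depth Z in the real camera -> 3D point in that camera's frame."""
    return ((u - k.cx) * depth / k.fx, (v - k.cy) * depth / k.fy, depth)


def transform(p: Vec3, rot: Mat3, trans: Vec3) -> Vec3:
    """Rigid transform X' = R X + t from the real-camera frame to the virtual-camera frame."""
    x, y, z = (sum(rot[i][j] * p[j] for j in range(3)) + trans[i] for i in range(3))
    return (x, y, z)


def project(p: Vec3, k: Intrinsics) -> Optional[Tuple[float, float]]:
    """3D point in the virtual-camera frame -> pixel (u', v'); None if it lies behind the camera."""
    x, y, z = p
    if z <= 0:
        return None
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


def warp_pixel(u: float, v: float, depth: float, k: Intrinsics,
               rot: Mat3, trans: Vec3) -> Optional[Tuple[float, float]]:
    """Where pixel (u, v) of the real camera appears in the virtual view."""
    return project(transform(backproject(u, v, depth, k), rot, trans), k)


if __name__ == "__main__":
    k = Intrinsics(fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)
    identity: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    # Virtual camera moved 0.5 m to the right of the real one, so t = -C = (-0.5, 0, 0).
    shift: Vec3 = (-0.5, 0.0, 0.0)
    print(warp_pixel(960.0, 540.0, 10.0, k, identity, shift))  # (910.0, 540.0)
    print(warp_pixel(960.0, 540.0, 5.0, k, identity, shift))   # (860.0, 540.0): closer point, larger shift
```

Points closer to the camera shift more between views (parallax), which is why the scene geometry has to be estimated first. A complete renderer would also have to handle occlusions, fill holes where no real camera saw the surface, and blend contributions from several cameras.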

FVV technology aims to exploit 5G features and functionalities to take a step forward in flexibility and portability. 5G connectivity will allow a portable FVV system to operate in real time with a greatly reduced deployment cost and high flexibility. Incorporating 5G into the FVV pipeline will allow the computational load to be distributed, paving the way for possible future service virtualisation. Moreover, the extensive use of 5G to interconnect the subsystems will allow all involved elements to be synchronized and the live immersive service to be controlled and operated remotely. In addition, all system interfacing and control aspects will be handled over the 5G network.

The envisaged use case targets, among other possibilities, the real-time immersive capture of sports events such as a basketball game. It will be possible to play back free-viewpoint trajectories around one of the court's baskets, both live and offline (as replays). The content can then be distributed not only to people attending the event (local delivery), but also to third parties. The most innovative aspect of this use case is that each user can select and watch a specific angle live, since all possible angles are available at any time.